Scholarly communications is in the midst of a crisis of confidence and capacity. On one side are articles that can’t be validated or reproduced, papermills operating at unprecedented capacity, and rising fraud. On the other are increasing policy requirements and editorial checks, overburdened staff, compressed timelines, and unsustainable submission volumes. The researcher’s role in all this is often unclear. As publishing professionals, we often lack insight into our authors’ state of mind: Are they aware of the policy? Do they understand how to fulfill it? Are they willing to do what we, as journal staff, ask of them?

Automation can provide a sustainable way to address these interconnected challenges – but only if it's developed thoughtfully, in a way that complements and supports editorial strengths while reducing the tedious and repetitive aspects of editorial work.

Editorial AI and the editorial office

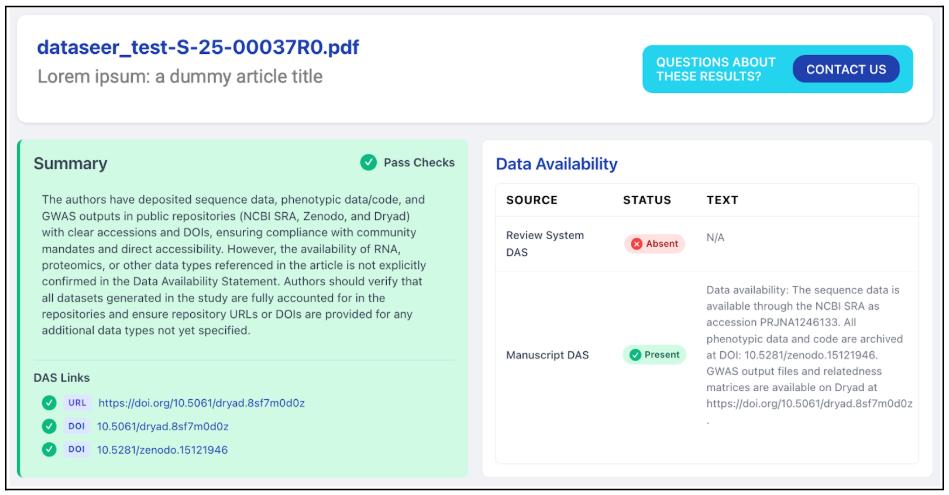

AI excels in areas bounded by rules and logic, the same sorts of tasks that are vulnerable to inconsistent application and human error. If there is a task in the Editorial Office that involves a checklist, a tracking spreadsheet, or a side-by-side comparison, AI can typically help. AI editorial tools have long been able to check equations and detect similarities between submitted manuscripts and the existing published literature. In the last few years, they’ve been adapted to spot image manipulation and to identify the hallmarks of generative AI and papermills in narrative writing.

More recent AI developments are opening new opportunities for editorial checks. DataSeer SnapShot, for example, works within existing platforms to perform checks that incorporate sophisticated, multi-tiered reasoning pathways, including the use of agentic LLMs to examine the contents of Supplemental Files. It determines which policy applies to a particular manuscript, scans and analyzes the full text, validates links, and verifies the contents of files. It even follows a chain of complex if/then logic to triage manuscripts, prepare personalized author sendback text, and flag special cases for staff review.
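To make the if/then triage idea concrete, here is a minimal Python sketch of a rule chain that picks a policy, checks a manuscript against it, and returns either a pass, author sendback text, or a staff-review flag. It is an illustration only, not how DataSeer SnapShot works: the `Manuscript` fields, the `POLICY_REQUIRES_DATA` table, and the `triage` function are all hypothetical, and link validation is assumed to have already happened upstream (each URL simply maps to whether it resolved).

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, Optional, Tuple


class Decision(Enum):
    PASS = "pass"
    SEND_BACK = "send_back"
    STAFF_REVIEW = "staff_review"


@dataclass
class Manuscript:
    # Hypothetical fields standing in for what a screening tool might
    # extract from the full text and supplemental files.
    manuscript_id: str
    article_type: str
    data_statement: Optional[str]
    data_links: Dict[str, bool] = field(default_factory=dict)  # URL -> resolved?
    claims_restricted_data: bool = False


# Hypothetical journal policy: which article types must share data.
POLICY_REQUIRES_DATA = {"research": True, "review": False, "editorial": False}


def triage(ms: Manuscript) -> Tuple[Decision, str]:
    """Walk a simple if/then chain; return a decision plus author-facing text."""
    # 1. Decide which policy applies; unknown article types go to staff.
    requires_data = POLICY_REQUIRES_DATA.get(ms.article_type)
    if requires_data is None:
        return Decision.STAFF_REVIEW, f"Unrecognized article type '{ms.article_type}'."
    if not requires_data:
        return Decision.PASS, ""
    # 2. Special cases such as restricted data need human judgement.
    if ms.claims_restricted_data:
        return Decision.STAFF_REVIEW, "Author reports access restrictions on the data."
    # 3. The policy requires a data availability statement.
    if not ms.data_statement:
        return Decision.SEND_BACK, (
            f"Manuscript {ms.manuscript_id}: please add a Data Availability "
            "Statement describing where the underlying data can be accessed."
        )
    # 4. Every cited data link must resolve (checked upstream).
    broken = sorted(url for url, ok in ms.data_links.items() if not ok)
    if broken:
        return Decision.SEND_BACK, (
            f"Manuscript {ms.manuscript_id}: the following data links could "
            "not be reached: " + ", ".join(broken)
        )
    return Decision.PASS, ""
```

Ordering the rules so that policy selection and special cases come first means routine failures get automatic sendback text, while anything ambiguous is routed to a person rather than decided by the tool.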

AI can check and apply the policies that editors set; it can remix the text they provide to form intelligent responses. It can reduce the burden on staff, but it can’t replace them. The goal is to free editors from the unmeetable burden of routine checks on ever-rising submission volumes, so they can focus on issues that require judgement, creativity, vision, expertise, or experience.

AI and the publishing landscape

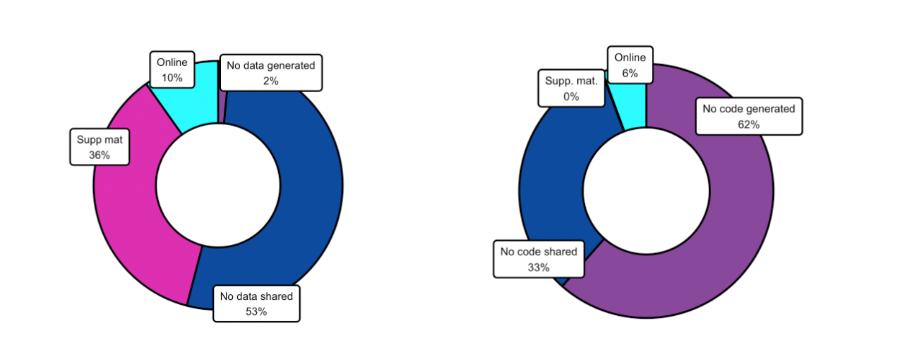

Alongside the daily work of moving manuscripts through the editorial and peer review process is the overarching business of running a publication: What policies should the journal embrace? How can it attract submissions? How can it build and maintain a reputation for quality and integrity?

In this realm of business intelligence and researcher behavior, too, AI has a role to play that cannot reasonably be filled in any other way. DataSeer’s Open Science Metrics scans thousands of published articles, identifying behaviors such as data sharing, code sharing, personal identifiers, and preprints, and providing breakdowns over time and by country, journal, publisher, subject area, institution, and other factors. Journals use that data to make decisions, set policy, establish meaningful goals and benchmarks, and measure the impact of their efforts over time.
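Once each article has been screened, a breakdown like the ones described above reduces to counting, per group, how many articles show a given behavior. The Python sketch below assumes each article has already been reduced to a hypothetical `ArticleRecord` with boolean flags; the record fields and the `sharing_rates` helper are illustrative assumptions, not how Open Science Metrics is built.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, Hashable, Iterable


@dataclass(frozen=True)
class ArticleRecord:
    # Hypothetical per-article output of a full-text screening step.
    year: int
    country: str
    journal: str
    shares_data: bool
    shares_code: bool


def sharing_rates(
    records: Iterable[ArticleRecord],
    group_by: Callable[[ArticleRecord], Hashable],
    indicator: Callable[[ArticleRecord], bool],
) -> Dict[Hashable, float]:
    """Return the fraction of articles in each group for which `indicator` is true."""
    hits: Dict[Hashable, int] = defaultdict(int)
    totals: Dict[Hashable, int] = defaultdict(int)
    for rec in records:
        key = group_by(rec)
        totals[key] += 1
        hits[key] += int(indicator(rec))
    return {key: hits[key] / totals[key] for key in totals}


# Usage: data-sharing rate per year, or code-sharing rate per (country, year).
# by_year = sharing_rates(records, lambda r: r.year, lambda r: r.shares_data)
# by_country_year = sharing_rates(records, lambda r: (r.country, r.year), lambda r: r.shares_code)
```

Passing the grouping key and the behavior as functions keeps one helper usable for any of the breakdowns (time, country, journal, and so on) without a separate routine for each.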

In one example, in partnership with Silverchair’s Sensus Impact, we used Open Science Metrics to determine whether, and at what rates, researchers are meeting funder data- and code-sharing requirements. That insight can be used to better understand the impact of grant expenditure on scientific progress, adjust funder policy, assess future grant applications, and much more.

The same essential approach might be used to examine any number of research landscape questions — from author-list make-up, to trends in methodological and analytical approaches, to submission and publication patterns and beyond.

Micro and macro: Putting it into practice

AI technologies support editors in both top-level strategy and detailed application, enabling manuscript-level interventions at massive scale and accurate monitoring of outcomes across journals, publishers, or the entire industry. It’s a feedback loop that facilitates continuous, iterative improvement: AI screening provides top-level data that helps shape policy decisions; in-system AI supports policy compliance; and top-level data gauges efficacy. As requirements once seen as new or challenging become normalized, new and more stringent requirements can be introduced, gradually leading the community toward consistently excellent, reproducible research.

In one recent example, IOP Publishing announced a data policy change for a subset of its journals based on insights gleaned through Open Science Metrics. After seeing the growing rate of data sharing among environmental researchers, the publisher strengthened its requirements for the relevant journals, confident that the area presents an opportunity to increase FAIR data-sharing practices in line with its mission.

Everyone who works with or relies on scholarly research benefits from stronger, more reliable science and increased access to non-article research outputs. By enabling faster, less cumbersome editorial oversight processes, DataSeer’s AI editorial support tools empower journals to achieve those aims and publish gold-standard scholarly research.

Learn more about DataSeer and the Silverchair Universe program here.